Ollama

Learn how to self-host an LLM with Ollama.

Self-hosting LLMs is currently a preview feature available on the Pro plan. Upgrade to Pro from the dashboard and join the waitlist if you’d like early access.

Squadbase lets you self-host LLMs with Ollama. Select the model you want from the dashboard, deploy it, and Squadbase issues an API-ready URL that you can call directly from Python (or any HTTP client).

Self-hosting an LLM

Deploy an LLM

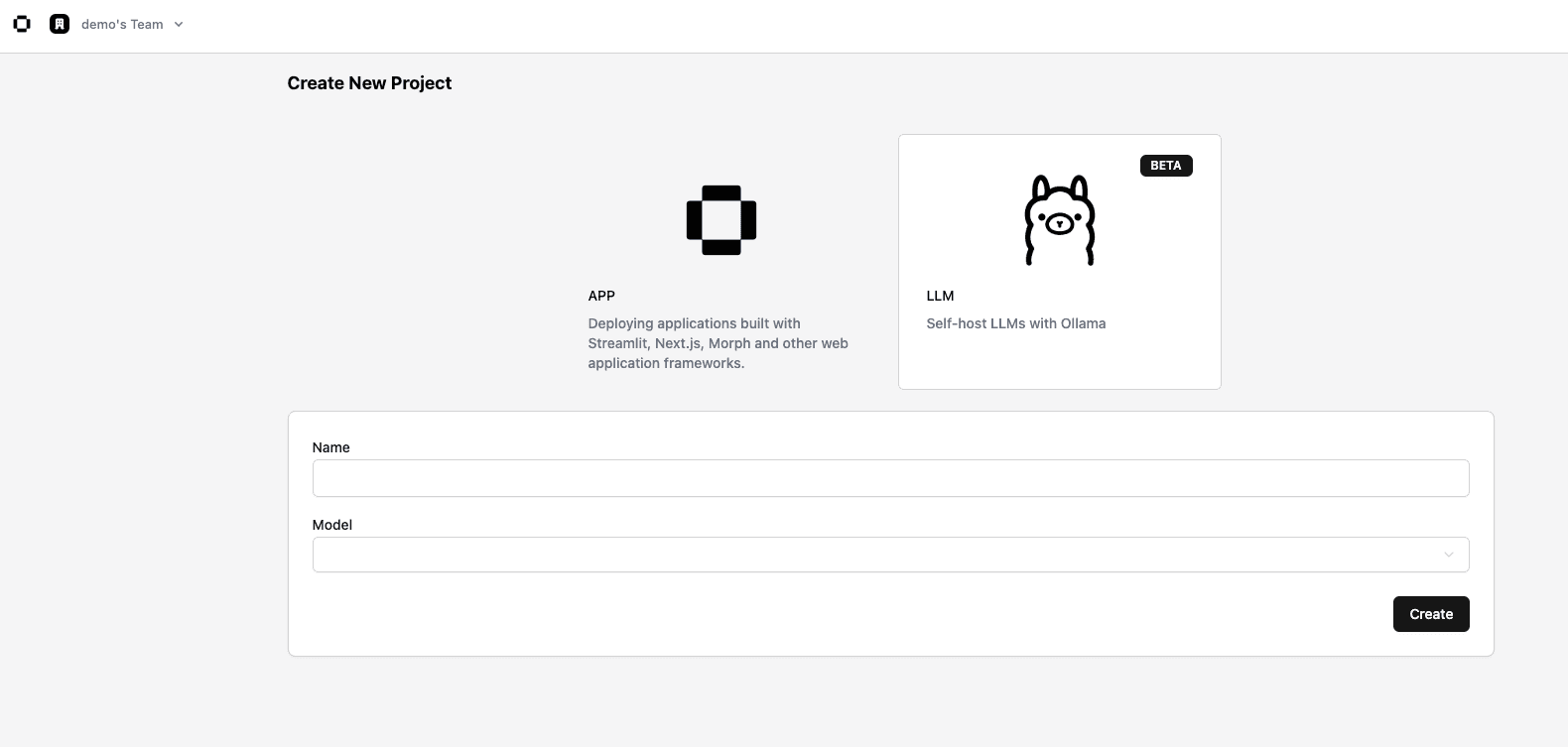

Go to the LLM tab on the dashboard to open the Create LLM screen.

Enter an LLM Name of your choice, then choose a model under Model Name.

Currently available models:

| Model | Parameters |
|---|---|
| deepseek-r1 | 1.5b, 7b, 8b, 14b |
| llama3.2 | 8b |
| phi4 | 14b |
| qwen | 0.5b, 1.8b, 4b, 7b, 14b |
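Ollama identifies a specific model size with a `name:size` tag, for example `deepseek-r1:7b`. This page does not confirm that Squadbase expects the same format for `{MODEL_NAME_YOU_DEPLOYED}`, so treat that as an assumption. Under it, the hypothetical helper below builds such a tag and checks it against the models listed above. `AVAILABLE_MODELS` and `model_tag` are illustrative names, not part of any Squadbase API.

```python
# Hypothetical helper: the available models and sizes, copied from the table on this page.
AVAILABLE_MODELS: dict[str, tuple[str, ...]] = {
    "deepseek-r1": ("1.5b", "7b", "8b", "14b"),
    "llama3.2": ("8b",),
    "phi4": ("14b",),
    "qwen": ("0.5b", "1.8b", "4b", "7b", "14b"),
}


def model_tag(model: str, size: str) -> str:
    """Return an Ollama-style "name:size" tag (assumed, not confirmed, to match Squadbase)."""
    sizes = AVAILABLE_MODELS.get(model)
    if sizes is None:
        raise ValueError(f"unknown model {model!r}; choose one of {sorted(AVAILABLE_MODELS)}")
    normalized = size.replace(" ", "").lower()  # accept "7 b" or "7B" as well as "7b"
    if normalized not in sizes:
        raise ValueError(f"{model} is not offered in size {size!r}; choose one of {sizes}")
    return f"{model}:{normalized}"


print(model_tag("deepseek-r1", "7b"))  # deepseek-r1:7b
```

Validating locally fails fast on a typo. Otherwise a misspelled name would only surface as an error from the deployed endpoint.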

Verify the model

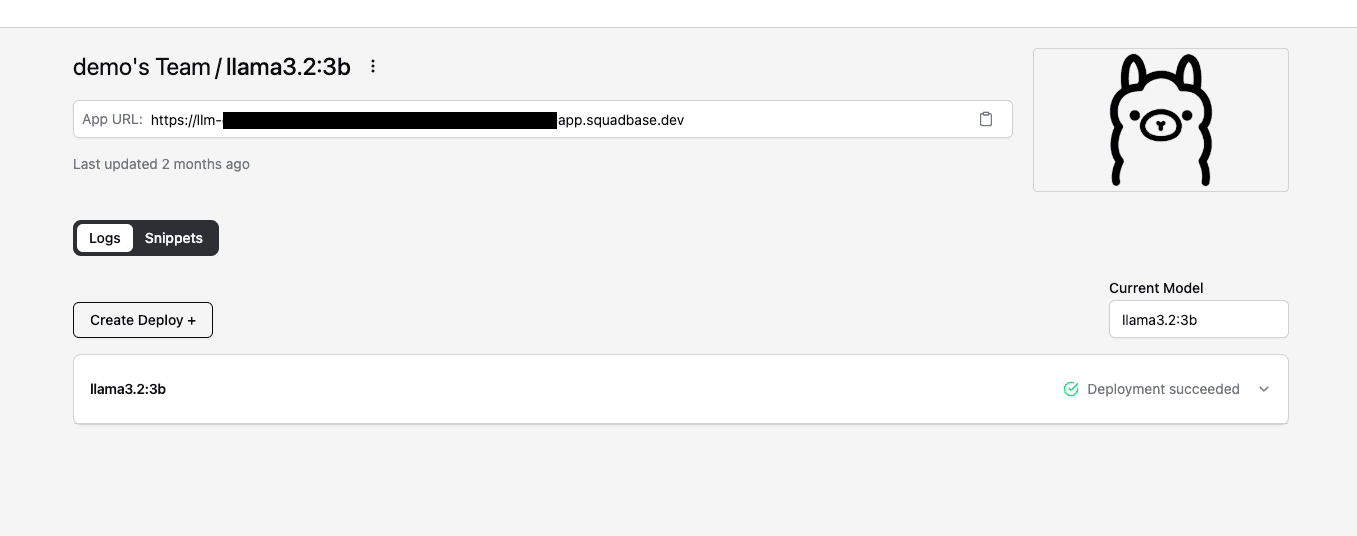

Select your newly created LLM under the LLM tab.

In Logs, the status should read Deployment Succeeded when the model is ready. If it’s still in progress, wait until deployment finishes.

The App URL is the endpoint Squadbase hosts for your LLM. Combine this URL with your Squadbase API Key to send requests.
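You can also confirm from code that the deployment is answering. One option is Ollama's `GET /api/tags` endpoint, which lists the models a server has available. This sketch rests on two assumptions: that the App URL proxies Ollama's native API (the `/api/chat` path in the cURL example on this page suggests it does) and that it accepts the same `x-api-key` header there. `list_models` and `is_model_available` are hypothetical names.

```python
import json
import urllib.request


def list_models(app_url: str, api_key: str, timeout: float = 10.0) -> dict:
    """GET {app_url}/api/tags, sending the Squadbase API key (assumed to be proxied to Ollama)."""
    request = urllib.request.Request(
        f"{app_url.rstrip('/')}/api/tags",
        headers={"x-api-key": api_key},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def is_model_available(tags: dict, model: str) -> bool:
    """Return True if `model` appears in an Ollama /api/tags response.

    Ollama reports names with a tag (e.g. "phi4:latest"), so a bare name
    also matches its ":latest" variant.
    """
    names = {entry.get("name", "") for entry in tags.get("models", [])}
    return model in names or f"{model}:latest" in names


# Usage (replace the placeholders first):
# tags = list_models("{YOUR_SQUADBASE_LLM_APP_URL}", "{YOUR_SQUADBASE_API_KEY}")
# print(is_model_available(tags, "{MODEL_NAME_YOU_DEPLOYED}"))
```

Parsing is kept separate from the network call. That way you can check `is_model_available` against a saved response without a live deployment.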

Send a request

Use the App URL and your API Key to query the LLM.

Below are Python and cURL examples.

For Python, install the langchain-ollama package.

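Run whichever one of these matches your package manager:

```shell
pip install langchain-ollama   # pip
poetry add langchain-ollama    # Poetry
uv add langchain-ollama        # uv
```

Then create a `ChatOllama` client that points at your App URL and sends your API Key in the `x-api-key` header:

```python
from langchain_ollama import ChatOllama

llm = ChatOllama(
    model="{MODEL_NAME_YOU_DEPLOYED}",
    base_url="{YOUR_SQUADBASE_LLM_APP_URL}",
    # Extra arguments for the underlying HTTP client, used here to send the Squadbase API Key
    client_kwargs={
        "headers": {
            "x-api-key": "{YOUR_SQUADBASE_API_KEY}",
        },
    },
)

# Stream the reply and print each chunk as it arrives
for chunk in llm.stream("Hello"):
    print(chunk.content, end="", flush=True)
```

With cURL, send the same request to the `/api/chat` endpoint:

```shell
curl --location '{YOUR_SQUADBASE_LLM_APP_URL}/api/chat' \
  --header 'Content-Type: application/json' \
  --header 'x-api-key: {YOUR_SQUADBASE_API_KEY}' \
  --data '{
    "model": "{MODEL_NAME_YOU_DEPLOYED}",
    "messages": [
      { "role": "user", "content": "Hello" }
    ]
  }'
```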
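Any HTTP client works, not only LangChain or cURL. By default, Ollama's `/api/chat` streams its reply as newline-delimited JSON objects. Each object carries a text fragment in `message.content`, and the last one has `"done": true`. The sketch below sends the same request as the cURL example using only Python's standard library. It assumes the App URL passes that stream through unchanged. `chat` and `collect_reply` are hypothetical names.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def collect_reply(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the text fragments from an Ollama /api/chat NDJSON stream."""
    for line in lines:
        if not line.strip():
            continue  # skip blank lines between JSON objects
        chunk = json.loads(line)
        yield chunk.get("message", {}).get("content", "")
        if chunk.get("done"):
            break  # the final object marks the end of the reply


def chat(app_url: str, api_key: str, model: str, prompt: str) -> Iterator[str]:
    """POST to {app_url}/api/chat with the Squadbase API key and stream the reply."""
    body = json.dumps(
        {"model": model, "messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    request = urllib.request.Request(
        f"{app_url.rstrip('/')}/api/chat",
        data=body,
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # Iterating the response yields one line at a time, matching NDJSON framing.
        yield from collect_reply(response)


# Usage (replace the placeholders first):
# for text in chat("{YOUR_SQUADBASE_LLM_APP_URL}", "{YOUR_SQUADBASE_API_KEY}",
#                  "{MODEL_NAME_YOU_DEPLOYED}", "Hello"):
#     print(text, end="", flush=True)
```

Splitting stream parsing from the request keeps `collect_reply` testable with canned lines. It also lets you reuse it with another HTTP library that yields response lines.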